`INTO OUTFILE` writes the selected rows to a file. Line terminators can be specified to produce a specific output format. Here is a complete example: `SELECT * FROM my_table INTO OUTFILE '/tmp/my_table.csv' FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'`

The file is saved on the server, and the path chosen needs to be writable. Though this query can be executed through PHP and a web request, it is best executed through the mysql console. Data exported in this manner can be imported into another database using LOAD DATA INFILE. While this method is superior to iterating through a result set and saving to a file row by row, it is not as good as using mysqldump.

mysqldump is superior to SELECT INTO OUTFILE in many ways; producing CSV is just one of the many things this command can do. The mysqldump client utility performs logical backups, producing a set of SQL statements that can be executed to reproduce the original database object definitions and table data. It dumps one or more MySQL databases for backup or transfer to another SQL server. The mysqldump command can also generate output in CSV, other delimited text, or XML format.

Ideally, mysqldump should be invoked from your shell. It is possible to use exec in PHP to run it, but since producing the dump might take a long time depending on the amount of data, and PHP scripts usually run for only 30 seconds, you would need to run it as a background process.

mysqldump isn't without its fair share of problems. It is not intended as a fast or scalable solution for backing up substantial amounts of data.
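The advice to run mysqldump outside the web request, as a background process, can be sketched roughly as follows. This is only an illustration under stated assumptions: Python's `subprocess` stands in for PHP's exec, the function names, database name, and output path are hypothetical, credentials are assumed to come from an option file such as `~/.my.cnf`, and the `--single-transaction` and `--quick` flags are a common choice for InnoDB tables rather than something the text above prescribes.

```python
import subprocess
from typing import List


def build_mysqldump_command(database: str, result_file: str, user: str = "root") -> List[str]:
    """Return the argument list for a logical backup of one database.

    The helper name and defaults are hypothetical; the flags are standard
    mysqldump options.
    """
    return [
        "mysqldump",
        f"--user={user}",
        "--single-transaction",  # assumption: InnoDB tables, dump from one consistent snapshot
        "--quick",               # fetch rows one at a time instead of buffering whole tables
        f"--result-file={result_file}",
        database,
    ]


def start_dump_in_background(database: str, result_file: str) -> subprocess.Popen:
    """Start mysqldump and return immediately instead of waiting for it to finish."""
    return subprocess.Popen(build_mysqldump_command(database, result_file))
```

Calling `start_dump_in_background("my_db", "/tmp/my_db.sql")` returns a process handle right away; the caller can later check `poll()` on it to see whether the dump has finished, which avoids tying the dump to a 30-second script limit.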

# MySQL Workbench import database upgrade

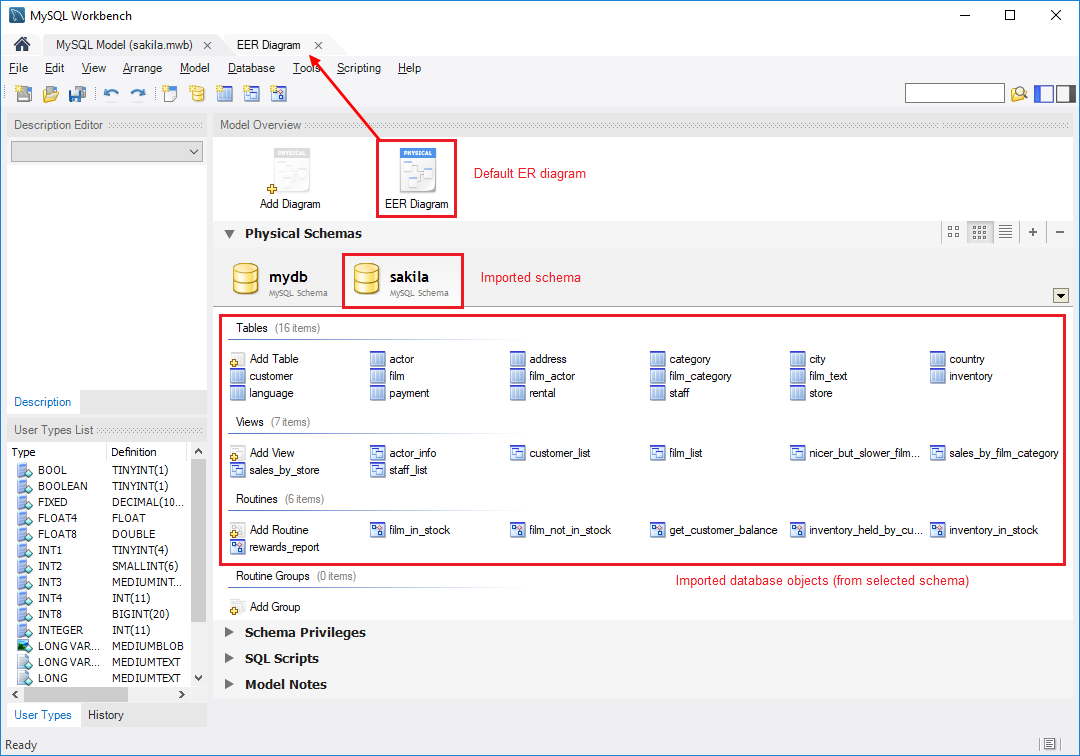

I have this huge 32 GB SQL dump that I need to import into MySQL. I haven't had to import such a huge SQL dump before. I did the usual: `mysql -uroot dbname < dbname.sql`

There is a table with around 300 million rows, and it has gotten to 1.5 million in around 3 hours. So it seems that the whole thing would take 600 hours (that's 25 days), which is impractical. So my question is: is there a faster way to do this?

Further Info/Findings

- The tables are all InnoDB and there are no foreign keys defined.
- I do not have access to the original server and DB, so I cannot make a new backup or do a "hot" copy etc.
- Setting innodb_flush_log_at_trx_commit = 2, as has been suggested, seems to make no (clearly visible/exponential) improvement.
- Server stats during the import were taken from MySQL Workbench.
- innodb_buffer_pool_size = 16M and innodb_log_buffer_size = 8M.

Percona's Vadim Tkachenko made a fine pictorial representation of InnoDB.

You definitely need to change the following: innodb_buffer_pool_size = 4G

Why these settings matter:

- innodb_buffer_pool_size will cache frequently read data.
- innodb_log_buffer_size: a larger buffer reduces write I/O to the transaction logs.
- innodb_log_file_size: a larger log file reduces checkpointing and write I/O.
- innodb_write_io_threads: background threads that service write operations. According to the MySQL documentation on configuring the number of background InnoDB I/O threads, each thread can handle up to 256 pending I/O requests. The default is 4 for MySQL and 8 for Percona Server.
- innodb_flush_log_at_trx_commit: in the event of a crash, both 0 and 2 can lose one second of data. The tradeoff is that both 0 and 2 increase write performance. I choose 0 over 2 because 0 flushes the InnoDB Log Buffer to the transaction logs (ib_logfile0, ib_logfile1) once per second, with or without a commit. Setting 2 flushes the InnoDB Log Buffer only on commit. There are other advantages to setting 0 mentioned by a former Percona instructor.

Restart mysql like this: `service mysql restart --innodb-doublewrite=0`

This disables the InnoDB Double Write Buffer. Then run the import.

When done, restart mysql normally: `service mysql restart`

This re-enables the InnoDB Double Write Buffer.

SIDE NOTE: You should upgrade to 5.6.21 for the latest security patches.
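The figures quoted in this thread can be checked with a little arithmetic. The sketch below is only a back-of-the-envelope aid: it assumes the import rate stays constant, and the function names are hypothetical. It reproduces the projected import time from the observed progress and the number of pending I/O requests a given count of write threads can hold at 256 requests per thread.

```python
def estimate_import_hours(total_rows: int, rows_done: int, hours_elapsed: float) -> float:
    """Project total import time, assuming the observed row rate stays constant."""
    rows_per_hour = rows_done / hours_elapsed
    return total_rows / rows_per_hour


def hours_to_days(hours: float) -> float:
    """Convert hours to days."""
    return hours / 24


def max_pending_io_requests(write_io_threads: int, per_thread: int = 256) -> int:
    """Pending I/O requests the write threads can hold, at 256 per thread."""
    return write_io_threads * per_thread


# Figures from the question: 300 million rows, 1.5 million imported after 3 hours.
hours = estimate_import_hours(300_000_000, 1_500_000, 3)
print(hours, hours_to_days(hours))   # 600.0 25.0
print(max_pending_io_requests(4))    # 1024 (with the MySQL default of 4 threads)
```

At 1.5 million rows per 3 hours the 300-million-row table alone needs about 600 hours, which is 25 days, so tuning the server before restarting the import is clearly worth the effort.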
